Ensemble

Contents

- Introduction

- Ensemble of collaborative filtering models

- Ensemble of collaborative filtering and content filtering models

Introduction

In this part, we build ensemble estimators, which make predictions based on the predictions of base estimators. The goal here is to build an ensemble estimator, of which the performance is at least as good as that of the best base estimator, which help us avoid the trouble of making choices among base estimators. Such ensemble estimator would be very helpful, especially in the cases when there is variation in terms of the performance of base estimators, or in the cases where some data required for certain base estimators are not available (e.g., values of some key predictors are missing in the content filtering models).

To avoid overfitting, we train the ensemble estimators on the cross-validation set rather than the training set we used for the training of base estimators. We drop the base estimators, of which the $R^2$ score on the cross-validation set is lower than a threshold (we choose 0.05 in this case); we call the remaining base estimators as qualified base estimators.

Here we tried 3 strategies to build ensemble estimators (weighted average, Ridge regression, and random forest regressor).

We use Champaign dataset (20571 reviews, 878 restaurants, 8451 users) for demo purpose.

Note: the fitting time we report in this part does NOT include the fitting and prediction time of base estimators.

Ensemble of collaborative filtering models

First, we built ensemble estimators for the qualified collaborative filtering base estimators.

Weighted average

The first strategy is to make the ensemble predictions as the weighted averages of the qualified base estimators, where we use $R^2$ score on the cross-validation set as weights.

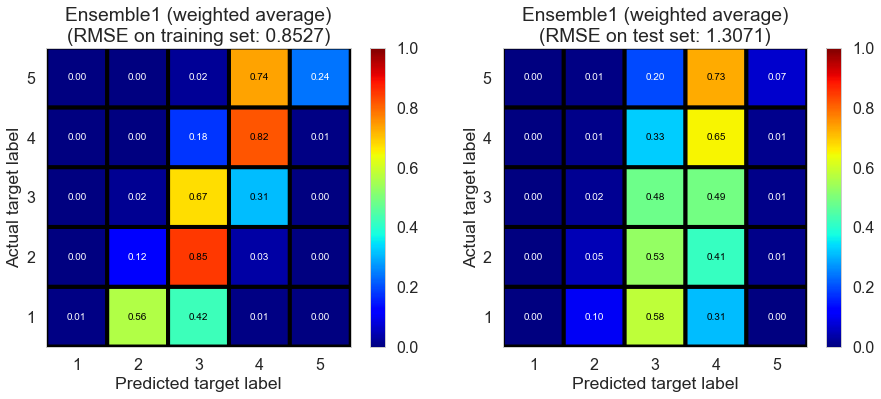

The result is shown below.

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| Ensemble1 (weighted average) | 0.0 | 0.8527 | 1.3071 | 0.6454 | 0.1882 |

The performance of weighted average is fine.

Ridge regression

The second strategy is to perform a Ridge regression on the predictions of qualified base estimators.

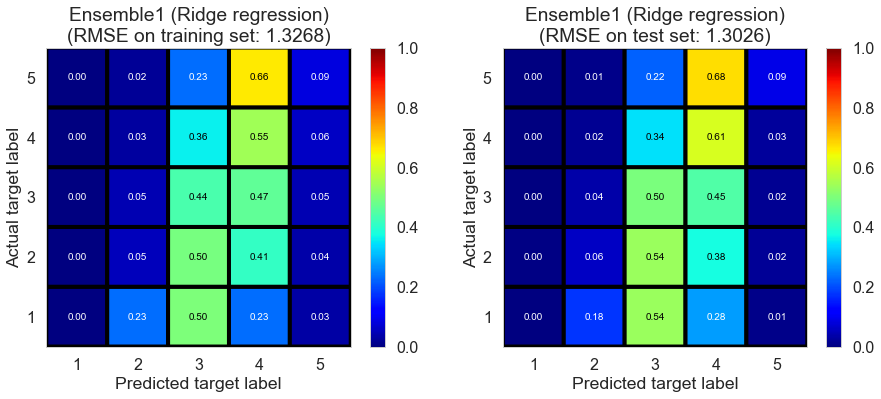

The result is shown below.

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| Ensemble1 (Ridge regression) | 0.004 | 1.3268 | 1.3026 | 0.1413 | 0.1937 |

The performance on the training set is poor. We need to point out that the training set here is not “real” training set since we train the ensemble estimator on the cross-validation set.

Random forest

The third strategy is to train a random forest regressor on the predictions of qualified base estimators. We can use the best parameters determined by cross-validation.

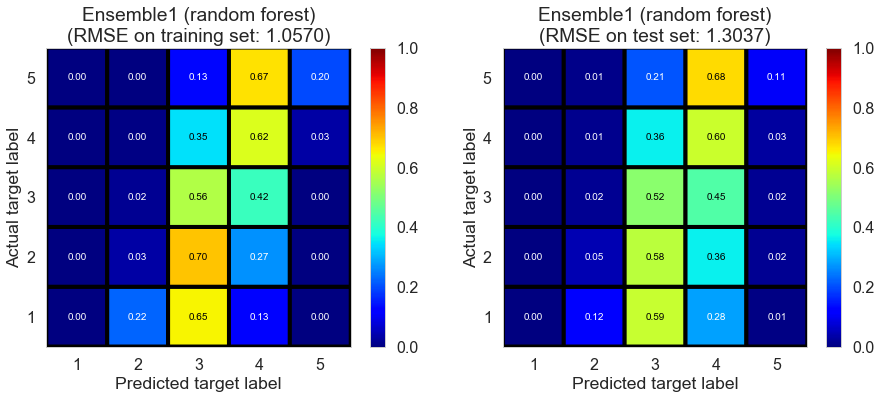

The result is shown below.

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| Ensemble1 (random forest) | 0.215 | 1.057 | 1.3037 | 0.4551 | 0.1924 |

The performance on the training set seems to be better than that of Ridge regression.

Ensemble of collaborative filtering and content filtering models

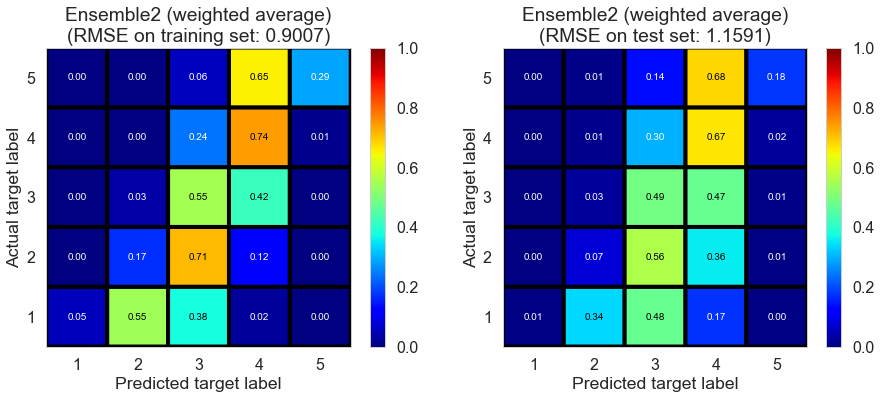

Weighted average

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| Ensemble2 (weighted average) | 0.0 | 0.9007 | 1.1591 | 0.6043 | 0.3616 |

The performance on the test set drops slightly.

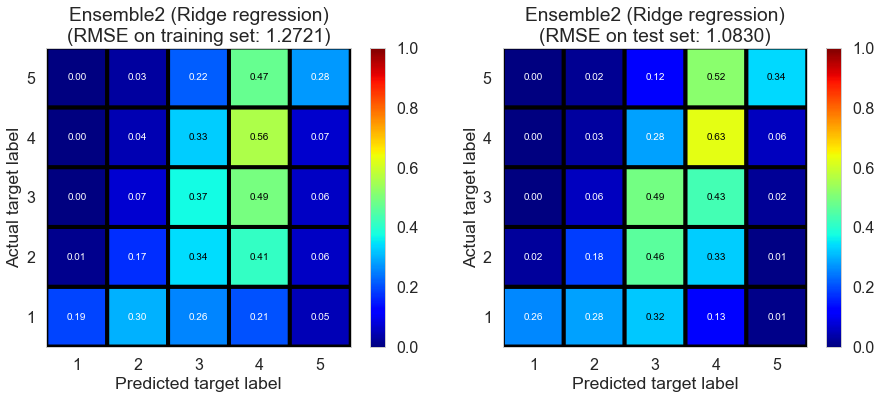

Ridge regression

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| Ensemble2 (Ridge regression) | 0.01 | 1.2721 | 1.083 | 0.2107 | 0.4426 |

The performance on the training set is bad.

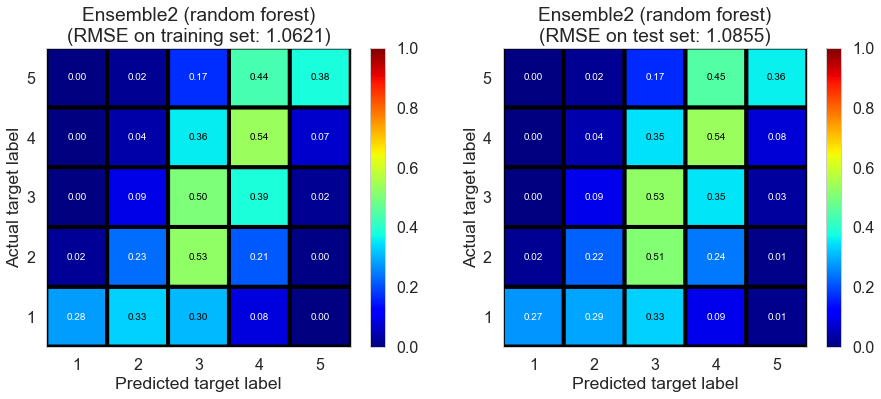

Random forest

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| Ensemble2 (random forest) | 0.268 | 1.0621 | 1.0855 | 0.4497 | 0.4401 |

The performance is good on both training set and test set, indicating random forest regressor is suitable for building ensemble estimator in this case.