Collaborative Filtering

Contents

Introduction

Collaborative filtering predicts ratings based on past user behavior, which is characterized by previous ratings in this case. To perform collaborative filtering, we only need to use restaurant ratings from each user. We acquire data for this part by keeping 3 features in review table, user_id, business_id, and stars.

Collaborative filtering includes 2 primary areas, neighborhood methods and latent factor models. In this part, we tested several baseline models, neighborhood methods and latent factor models. We implemented some baseline models and latent factor models from scratch (by using numpy and scipy’s linear algebra toolkits instead of well-established recommender system packages); we implemented other algorithms by wrapping around methods in a recommender system python package, scikit-surprise. Each algorithm we implemented by using the scikit-surprise package is indicated by a * after its name.

Here, we use Champaign dataset (20571 reviews, 878 restaurants, 8451 users) for demo purpose.

We randomly split the reviews into 3 sets: a training set (60%), a cross-validation set (16%) and a test set (24%). We train base estimators on the training set, and test on the test set; cross-validation set is used for the training of ensemble estimators later.

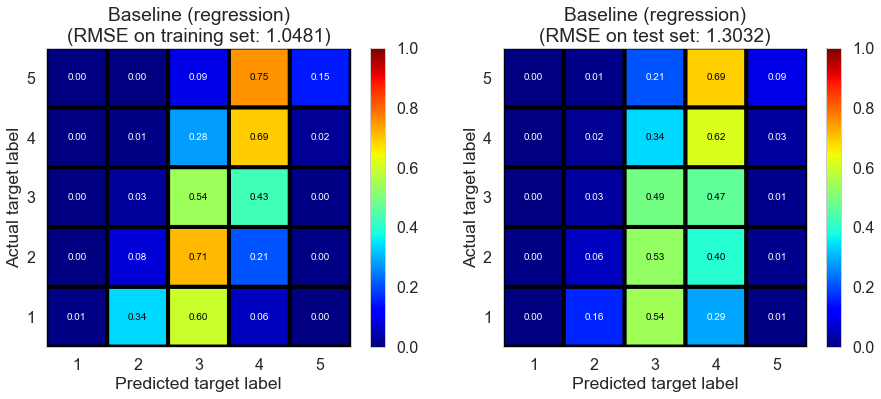

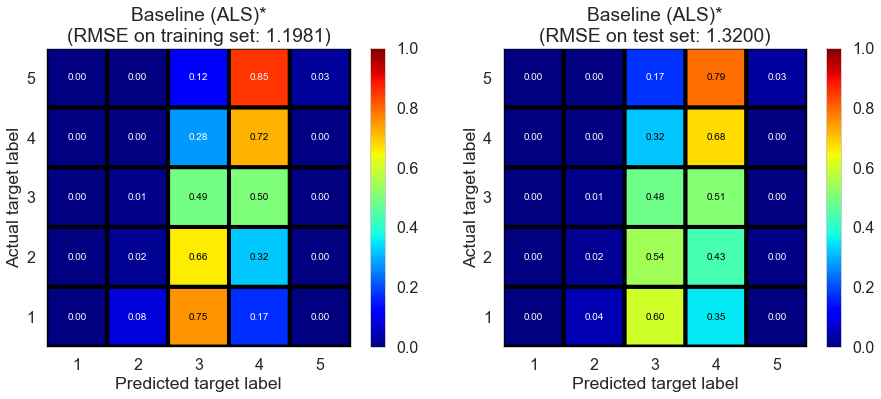

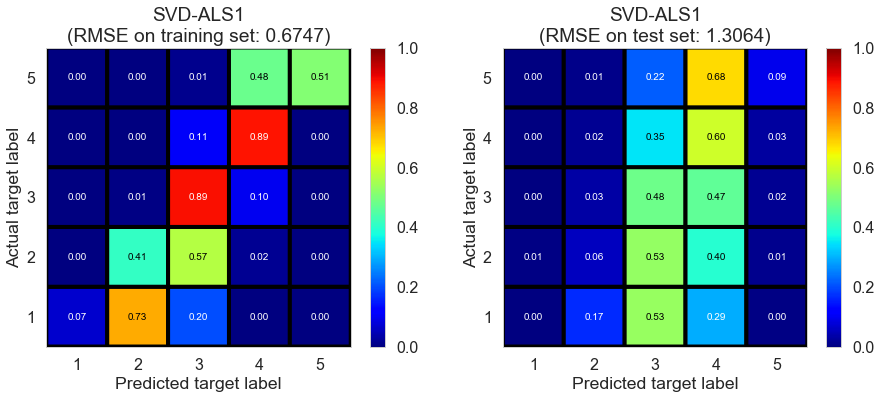

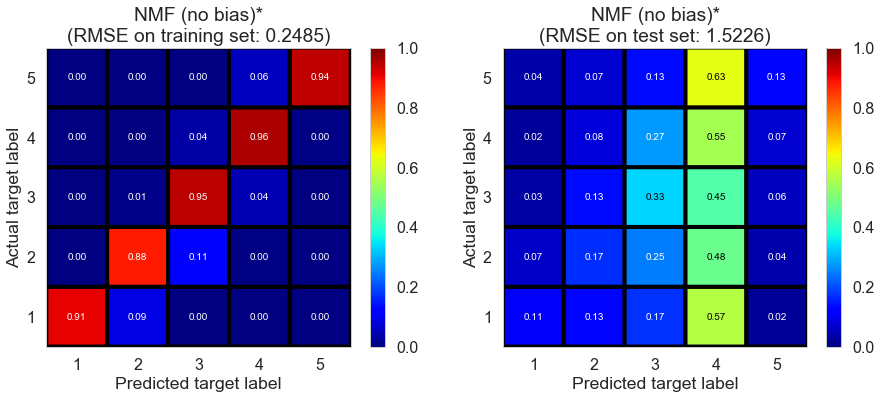

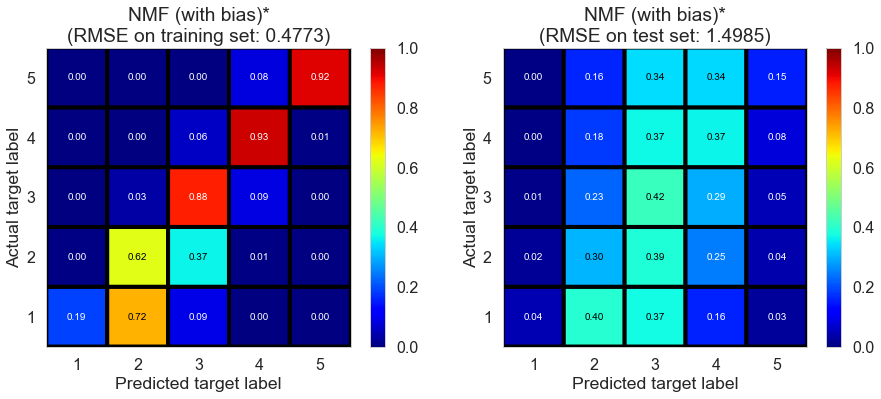

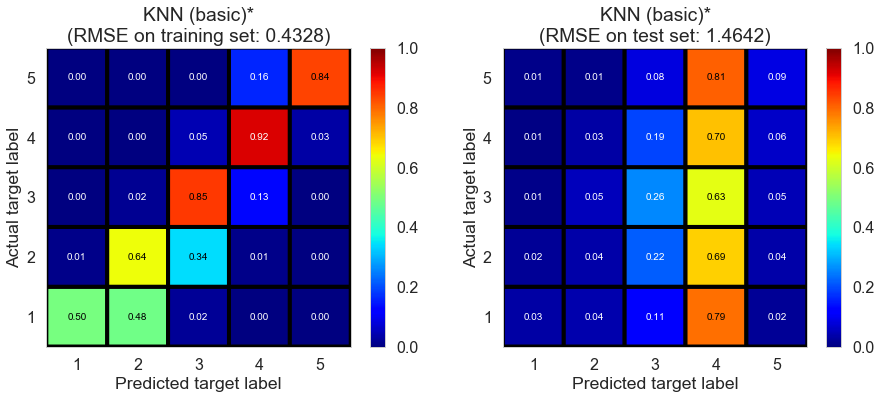

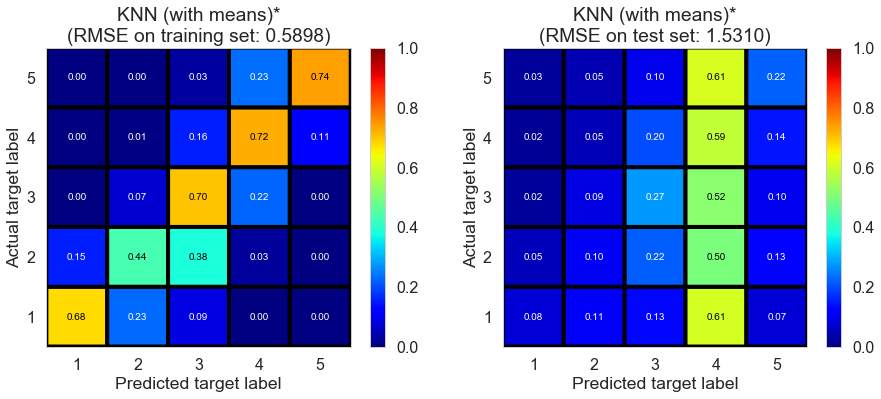

For each model, we report root mean square error (RMSE) and $R^2$ score on training set and test set. To gain some insight on model’s performance on different ratings, we round predicted ratings, where predicted ratings below 1 are rounded to 1 and predicted ratings above 5 are round to 5, and plot the confusion matrix of training set and test set (in a format used in a related work).

Baseline models

We first built some baseline models, including mode estimator, normal predictor*, baseline (mean), baseline (regression), and baseline (ALS)*.

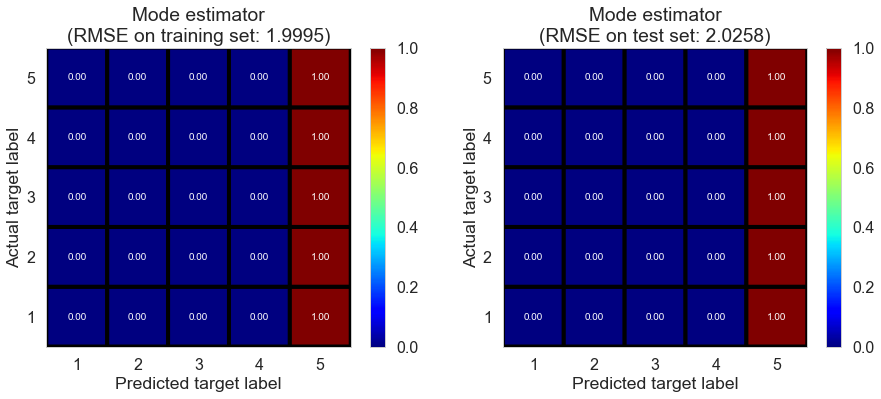

Mode estimator

Inspired by the fact that most ratings are 5 from EDA part, we build a mode estimator, which predicts every rating as the mode value of all ratings.

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| Mode estimator | 0.0 | 1.9995 | 2.0258 | -0.9501 | -0.95 |

As we mentioned earlier, we could plot a confusion matrix to gain some insight on model’s performance on different ratings by rounding the predicted ratings, setting predictions below 1 to 1, above 5 to 5, and using a format used in a related work (the same below).

We plot the confusion matrix for training set on the left, and that for test set on the right (the same below).

The performance of mode estimator is poor as indicated by negative $R^2$, which is not surprising.

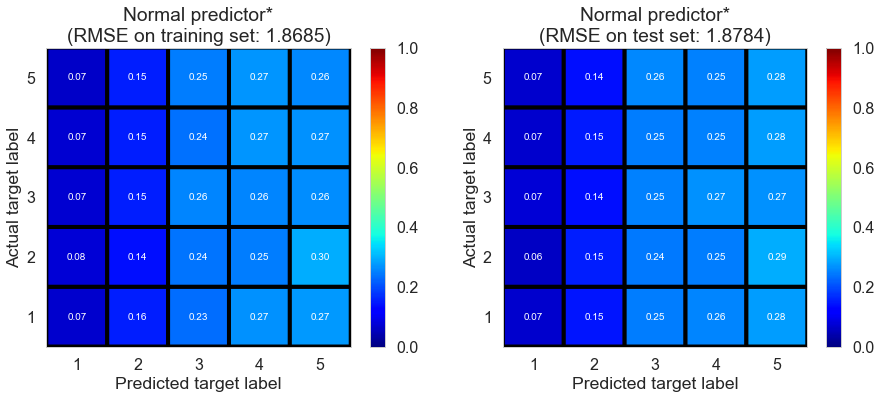

Normal predictor*

We implemented this algorithm by wrapping around NormalPredictor in the scikit-surprise package.

As described in the documentation,

Normal predictor* assumes the distribution of the training set to be normal, which doesn’t hold in this case. The prediction $\hat{r}_{ui}$ is generated from a normal distribution $\mathcal{N}(\hat{\mu}, \hat{\sigma}^2)$ where $\hat{\mu}$ and $\hat{\sigma}$ are estimated from the training data using the Maximum Likelihood Estimation:

The result is shown below.

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| Normal predictor* | 0.051 | 1.8685 | 1.8784 | -0.7029 | -0.6767 |

The performance of normal predictor is also very poor since rating do NOT satisfy normal distribution (as shown in EDA).

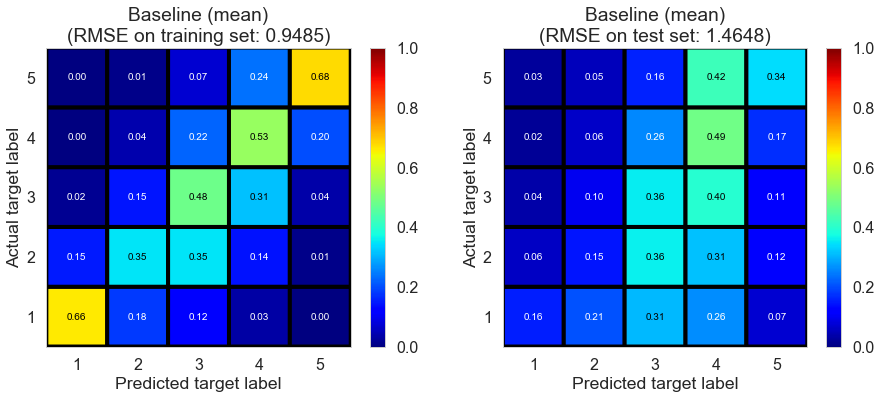

Baseline (mean)

Here we assume much of variation in observed ratings comes from effects associated with either users or items, known as biases (as assumed in most latent factor models). We estimate the ratings as:

In this model, we estimate biases with sample averages:

where $\bar{r}_u$ and $\bar{r}_i$ represent average ratings of user $u$ and restaurant $i$ repsectively, $b_u$ and $b_i$ represent the biases of user $u$ and restaurant $i$ repsectively from some intercept parameter $\mu$, which is estimated by the whole sample average in this case.

When we make predictions, if user $u$ is unknown, the bias $b_u$ is assumed to be zero; if the restaurant $i$ is unknown, the bias $b_i$ is assumed to be zero.

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| Baseline (mean) | 0.021 | 0.9485 | 1.4648 | 0.5612 | -0.0195 |

Baseline (mean) performs well on training set; however, it suffers from overfitting as indicated by negative $R^2$ on test set, which is presumably due to the fact that there is no regularization in this model.

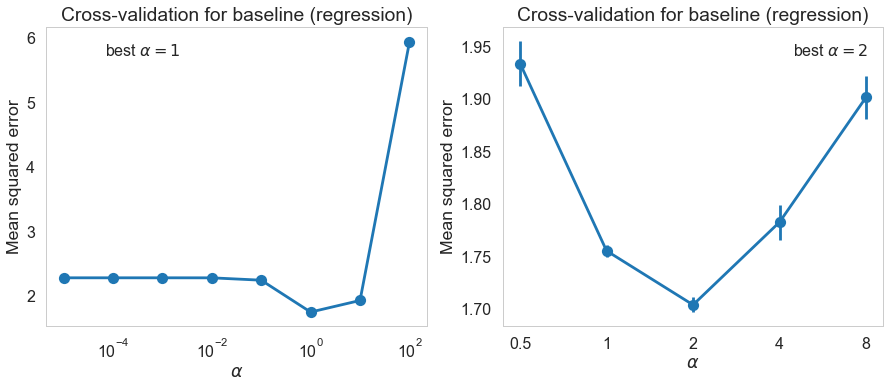

Baseline (regression)

Similarly, we estimate the ratings as:

In this model, we estimate biases of users and restaurants using regularized regression.

Specifically, we minimize the following regularized squared error:

We perform one-hot encoding on user_id and business_id, and store the corresponding design matrix as a sparse matrix. And we solve the Ridge regression algorithm using LSMR.

When we make predictions, if user $u$ is unknown, the bias $b_u$ is assumed to be zero; if the restaurant $i$ is unknown, the bias $b_i$ is assumed to be zero.

We performed 2 rounds of cross-validation to determine the best regularization term $\lambda$.

We can test the performance of baseline (regression) using the best regularization parameter determined by the cross-validation.

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| Baseline (regression) | 0.027 | 1.0481 | 1.3032 | 0.4642 | 0.193 |

As we can see, baseline (regression) predicts most ratings to be 3 or 4. The performance of this model is significantly better than previous baseline models, as indicated by larger $R^2$.

Baseline (ALS)*

We implemented this algorithm by wrapping around BaselineOnly in the scikit-surprise package.

Here we also estimate ratings as:

And we minimize the same regularized squared error:

Rather than applying one-hot encoding on user_id and business_id simultaneously, alternating least squares (ALS), which is the default algorithm used in BaselineOnly, is used here for minimizing the regularized squared error.

When we make predictions, if user $u$ is unknown, the bias $b_u$ is assumed to be zero; if the restaurant $i$ is unknown, the bias $b_i$ is assumed to be zero.

We use the default parameters, and the result is shown below.

Estimating biases using als...

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| Baseline (ALS)* | 0.061 | 1.1981 | 1.32 | 0.2998 | 0.1721 |

The performance on the test set is similar with baseline (regression), while it is worse on the training set, indicating the variance of the model is similar but the bias is higher.

Latent factor models

Here we tried several matrix factorization-based algorithms, including several different implementations of singular value decomposition (SVD) and non-negative matrix factorization (NMF).

SVD-ALS1

We first implemented sigular value decomposition via alternating least squares (SVD-ALS).

We estimate ratings as:

where $p_u$ represents the latent factors associated with user $u$, and $q_i$ represents the latent factors associated with restaurant $i$.

And we minimize the following regularized squared error:

Two widely used algorithms for solving the above problem are stochastic gradient descent (SGD) and alternating least squares (ALS).

We implement SVD-ALS1 as follows:

- Fit a baseline (regression) model with optimized regularization term to learn $\mu$, $b_u$, and $b_i$.

- Initialize $p_u$ by randomly generating values from a set of IID normal distributions with user defined

init_meanandinit_std. - In each iteration, we first solve regularized least squares problem for $q_i$ by fixing $p_u$, and then solve for $p_u$ by fixing $q_i$. Specifically, we don’t update biases $b_u$ or $b_i$ in each iteration in SVD-ALS1. We use LSMR to solve regularized least squares problem in each iteration.

When we make predictions, if user $u$ is unkown, then the bias $b_u$ and the factors $p_u$ are assumed to be zero; if restaurant $i$ is unknown, the bias $b_i$ and the factors $q_i$ are assumed to be zero.

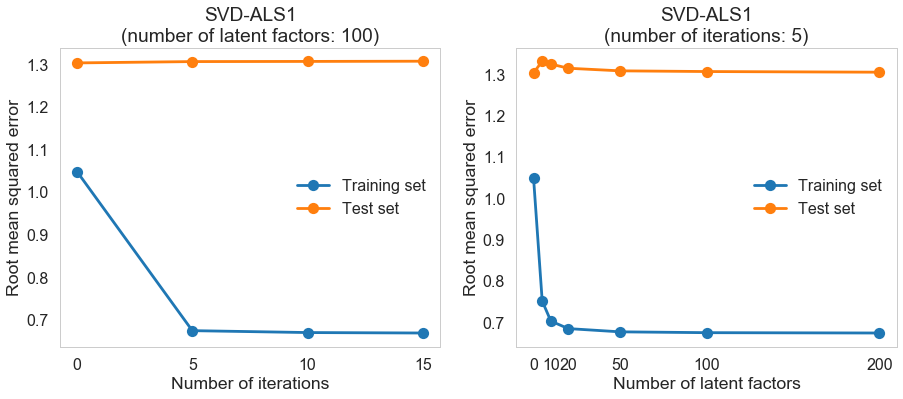

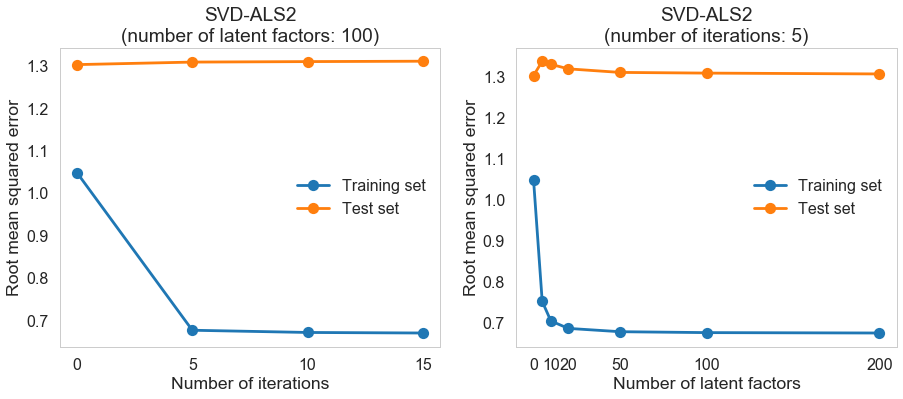

We can gain some intuition about the impact of the number of iterations and the number of latent factors on the training and test performance before determining the best parameters through cross-validation.

The performance on the training set improves significantly compared to baseline (regression) model when the number of iterations and the number of latent factors are not too small; the performance on the test set doesn’t change much.

We can determine the best parameters through cross-validation. And we can test the performance of the algorithm on our dataset.

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| SVD-ALS1 | 12.3007 | 0.6747 | 1.3064 | 0.778 | 0.1891 |

The performance on the test set doesn’t change much but the performance on the training set improves significantly compared to baseline (regression) model. SVD-ALS1 significantly decreases the bias of the model without significantly increasing the variance.

SVD-ALS2

Different from SVD-ALS1, SVD-ALS2 updates biases $b_u$ or $b_i$ in each iteration.

We estimate ratings as:

where $p_u$ represents the latent factors associated with user $u$, and $q_i$ represents the latent factors associated with restaurant $i$.

And we minimize the following regularized squared error:

We implement SVD-ALS2 as follows:

- Fit a baseline (regression) model with optimized regularization term to learn $\mu$, $b_u$, and $b_i$.

- Initialize $p_u$ by randomly generating values from a set of IID normal distributions with user defined

init_meanandinit_std. - In each iteration, we first solve regularized least squares problem for $q_i$ and $b_i$ by fixing $p_u$ and $b_u$, and then solve for $p_u$ and $b_u$ by fixing $q_i$ and $b_i$. Specifically, we update biases $b_u$ or $b_i$ in each iteration in SVD-ALS2. We use LSMR to solve regularized least squares problem at each iteration.

When we make predictions, if user $u$ is unkown, then the bias $b_u$ and the factors $p_u$ are assumed to be zero; if restaurant $i$ is unknown, the bias $b_i$ and the factors $q_i$ are assumed to be zero.

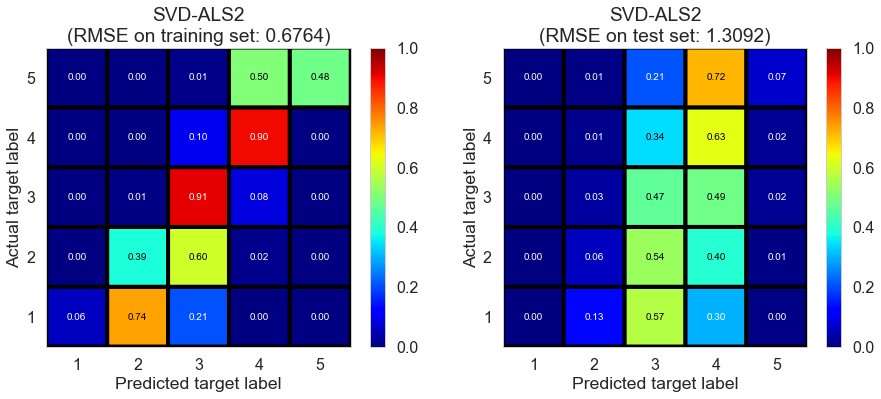

We can determine the best parameters through cross-validation. And we can test the performance of the algorithm on our dataset.

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| SVD-ALS2 | 13.1128 | 0.6764 | 1.3092 | 0.7768 | 0.1855 |

The result of SVD-ALS2 is similar to that of SVD-ALS1, indicating updating biases in each iteration doesn’t have much impact on the model performance.

SVD-SGD*

We implemented this algorithm by wrapping around SVD in the scikit-surprise package.

Stochastic gradient descent (SGD) is another widely used algorithm for solving SVD problems.

We estimate ratings as:

where $p_u$ represents the latent factors associated with user $u$, and $q_i$ represents the latent factors associated with restaurant $i$.

And we minimize the following regularized squared error:

As described in documentation,

SVD-SGD* performs minization by stachastic gradient descent:

where $e_{ui} = r_{ui} - \hat{r}_{ui}$.

When we make predictions, if user $u$ is unkown, then the bias $b_u$ and the factors $p_u$ are assumed to be zero; if restaurant $i$ is unknown, the bias $b_i$ and the factors $q_i$ are assumed to be zero.

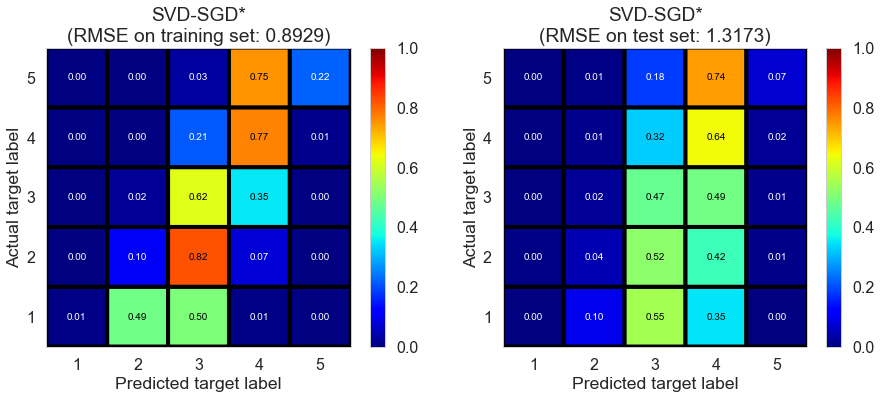

We use the default parameters, and the result is shown below.

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| SVD-SGD* | 1.0001 | 0.8929 | 1.3173 | 0.6111 | 0.1754 |

The result of SVD-SGD* is similar to that of SVD-ALS1 and SVD-ALS2.

SVD++-SGD*

We implemented this algorithm by wrapping around SVDpp in the scikit-surprise package.

As described in documentation,

SVD++ algorithm takes into account implicit ratings. The prediction $\hat{r}_{ui}$ is set as:

where $y_i$ terms capture implicit ratings; an implicit rating describes the fact that a user $u$ rated a restaurant $j$, regardless of the rating value.

When we make predictions, if user $u$ is unkown, then the bias $b_u$ and the factors $p_u$ are assumed to be zero; if restaurant $i$ is unknown, $b_i$, $q_i$ and $y_i$ are assumed to be zero.

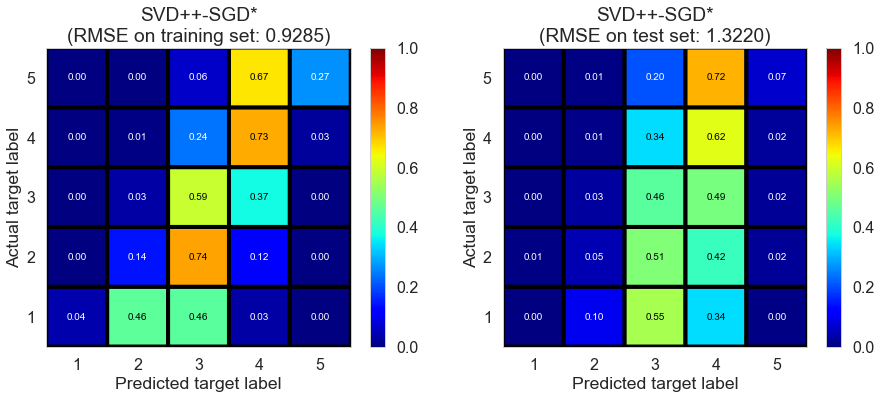

We use the default parameters, and the result is shown below.

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| SVD++-SGD* | 3.3182 | 0.9285 | 1.322 | 0.5795 | 0.1695 |

The inclusion of implicit rating doesn’t seem to significantly change model performance.

NMF-SGD*

We implemented this algorithm by wrapping around NMF in the scikit-surprise package.

As described in the documentation,

NMF sets the prediction $\hat{r}_{ui}$ as:

where $p_u$ and $q_i$ are kept positive.

Each step of SGD updates $p_{uf}$ and $q_{if}$ as follows:

We use the default parameters, and the result is shown below.

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| NMF (no bias)* | 1.2431 | 0.2485 | 1.5226 | 0.9699 | -0.1016 |

The model suffers from serious overfitting, partially because we doesn’t add bias term.

We can try the biased version of NMF, which sets the prediction as

The result is shown below.

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| NMF (with bias)* | 1.1971 | 0.4773 | 1.4985 | 0.8889 | -0.067 |

It is better than the version without bias terms, but the model still suffers from overfitting. Although we could potentially address the issue by tuning regularization parameters, the result suggests it might not be a good idea to use NMF in this case.

Neighborhood methods

In this part, we tried some neighborhood methods available in the scikit-surprise package. Neighborhood methods don’t perform well in this dataset in general since a lot of users and restaurants don’t have any neighbors, making the models susceptible to overfitting.

k-NN (basic)*

We implemented this algorithm by wrapping around KNNBasic in the scikit-surprise package.

As described in the documentation,

k-NN (basic)* sets the prediction as:

or

We use the default parameters, and the result is shown below.

Computing the msd similarity matrix...

Done computing similarity matrix.

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| KNN (basic)* | 1.0001 | 0.4328 | 1.4642 | 0.9086 | -0.0187 |

The model suffers from overfitting as we expected.

k-NN (with means)*

We implemented this algorithm by wrapping around KNNWithMeans in the scikit-surprise package.

As described in the documentation,

k-NN (with means)* takes into account the average ratings of each user, and sets the prediction as:

or

We use the default parameters, and the result is shown below.

Computing the msd similarity matrix...

Done computing similarity matrix.

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| KNN (with means)* | 1.1161 | 0.5898 | 1.531 | 0.8303 | -0.1138 |

Still, the model suffers from overfitting as we expected.

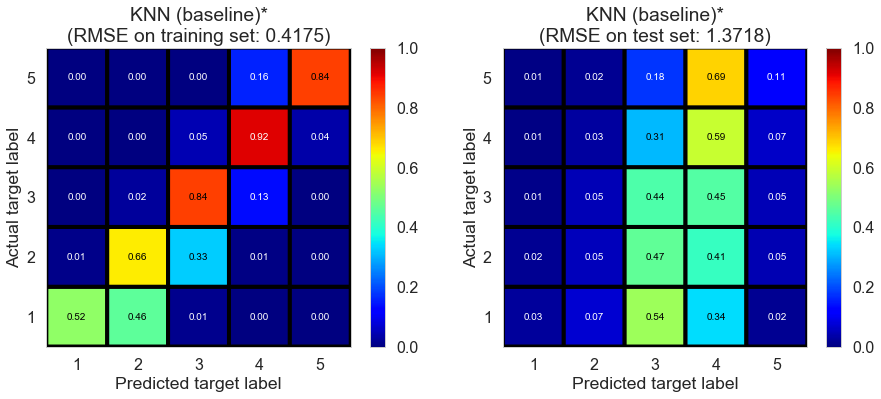

k-NN (baseline)*

We implemented this algorithm by wrapping around KNNBaseline in the scikit-surprise package.

As described in the documentation,

k-NN (baseline)* takes into account the baseline rating, and sets the prediction as:

or

We use the default parameters, and the result is shown below.

Estimating biases using als...

Computing the msd similarity matrix...

Done computing similarity matrix.

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| KNN (baseline)* | 1.0251 | 0.4175 | 1.3718 | 0.915 | 0.1058 |

Although the model is still not as good as some latent factor models we tried ealier in terms of test performance, the variance of the model is much less than other k-NN models we tried, indicating the inclusion of baseline ratings is helpful.

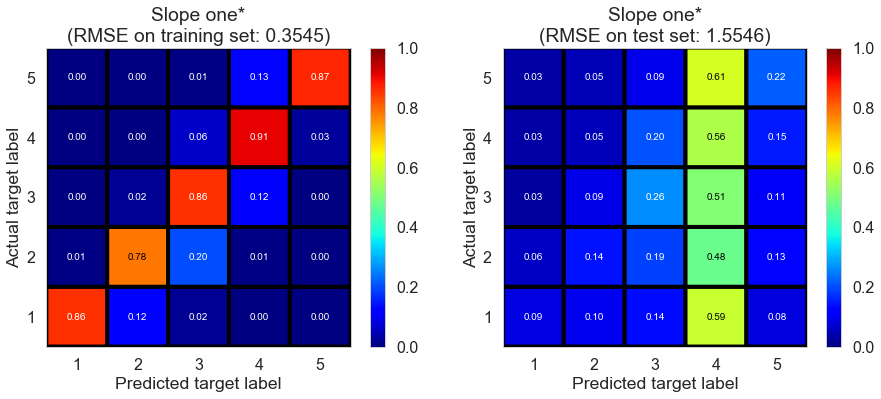

Slope one*

We implemented this algorithm by wrapping around SlopeOne in the scikit-surprise package.

As described in the documentation,

Slope one* sets the prediction as:

where $R_i(u)$ is the set of relevant restaurants, i.e. the set of restaurants $j$ rated by $u$ that also have at least one common user with $i$. $\text{dev}(i, j)$ is defined as the average difference between the ratings of $i$ and those of $j$:

We use the default parameters, and the result is shown below.

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| Slope one* | 0.158 | 0.3545 | 1.5546 | 0.9387 | -0.1484 |

The model suffers from overfitting as we expected.

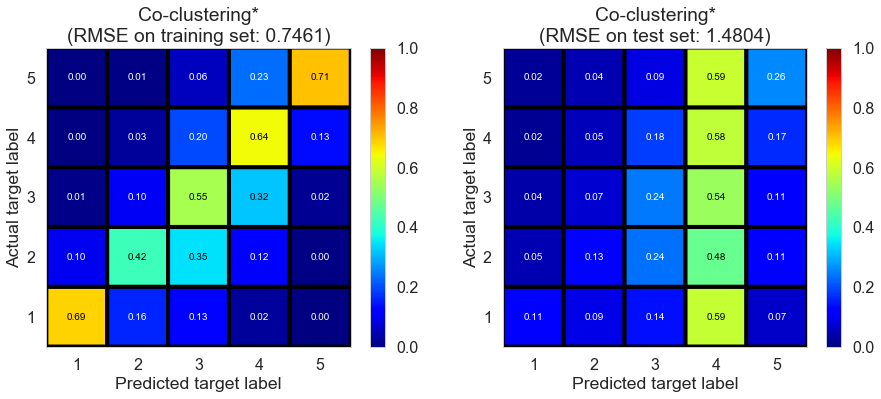

Co-clustering*

We implemented this algorithm by wrapping around CoClustering in the scikit-surprise package.

As described in the documentation,

Co-clustering sets the prediction as:

where $\overline{C_{ui}}$ is the average rating of co-cluster $C_{ui}$, $\overline{C_u}$ is the average rating of $u$’s cluster, and $\overline{C_i}$ is the average rating of $i$’s cluster.

We use the default parameters, and the result is shown below.

| model | fitting time (s) | train RMSE | test RMSE | train $R^2$ | test $R^2$ |

|---|---|---|---|---|---|

| Co-clustering* | 1.2651 | 0.7461 | 1.4804 | 0.7285 | -0.0414 |

The model suffers from overfitting as we expected.